Our brain-controlled sex toy experiment offers a preview of what the future may hold. Whether used to grant greater sexual autonomy to people who cannot move or simply to experience pleasure, brain-computer interfaces will change life as we know it.

This body of work details the creation and validation of a brain-computer interface (BCI) for controlling an adult toy without any overt movement of the body. With an eye towards clinical populations who have limited agency over their sexual health, the objective of the prototype was to demonstrate that mental commands derived from a brain imaging device can be translated, reliably enough to be useful, into functional use of a masturbatory appliance.

In our prototype we utilized two pieces of existing hardware, an electroencephalography (EEG) headset and an Autoblow AI+.

This project was commissioned by Very Intelligent Ecommerce Inc. with the goal of commercializing the related technology. The authors of this paper have chosen to remain anonymous due to potential negative career impacts based on the sexual nature of the commissioned work.

Brain-computer interfaces (BCIs) create a system for communication between a computer and the brain, often with the aim of controlling external devices. Many different imaging modalities can be used to read brain signals for such a neurofeedback paradigm, including electroencephalography (EEG), magnetoencephalography (MEG), functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS). Among these modalities, EEG is the most common and cost-effective: it records brain signals by detecting changes in the brain's electrical activity through electrodes placed against the scalp.

Individuals with locked-in syndrome (LIS) are just one example of the many types of patients who could benefit from commercialized BCI sexual pleasure devices. People with locked-in syndrome perceive the world around them but cannot communicate or move because of complete or near-complete paralysis of their muscles. BCIs have been proposed as a means to allow LIS patients to interact with the external world using only brain activity. EEG-based BCIs take many forms, with motor imagery (MI) being the most suitable and practical for LIS patients. As is the case with many clinical populations, the sexual health of LIS patients has historically not garnered much research.

High-performing BCIs are often restricted to carefully controlled, noise-free, artificial environments, and are heavily dependent on expensive medical-grade systems. To address this, the project examined the viability of an accessible BCI prototype for controlling an adult toy using lower-cost, commercially available neuroimaging equipment.

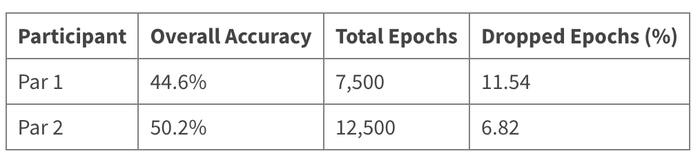

Two adult male subjects (26±1.5 years) participated in the study. Each participant completed between 12 and 20 sessions. Neither participant had any psychological disorders, and both had normal visual acuity. In addition, neither had taken any medication for at least the previous 30 days. Both participants reported having no muscle pain or related disorders.

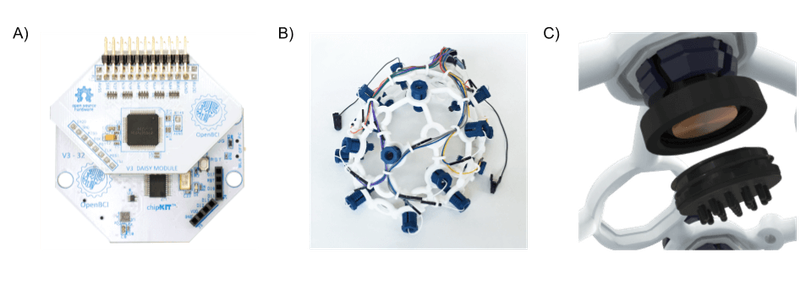

Biosignals were acquired with an OpenBCI Cyton-Daisy (16-channel) acquisition system, two channels of which were used for electrooculography (EOG). Signals were recorded as point voltages sampled at 125 Hz and transmitted wirelessly over low-energy Bluetooth.

OpenBCI UltraCortex Mark IV headsets were used and set up in accordance with manufacturer instructions, with two additional straps, one under the chin and one under the inion. Prior to data collection the headsets were adjusted for participant comfort and signal quality, using the OpenBCI GUI to check signal quality and impedances (i.e., none railed and impedances less than 100 kOhms).

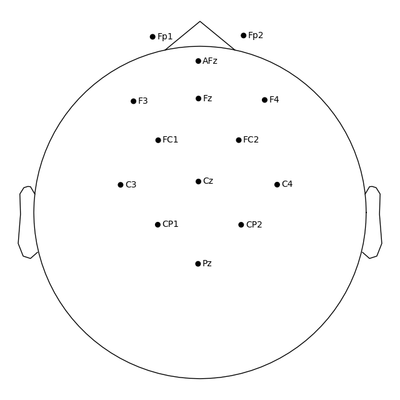

The electrodes used were Active ThinkPulse electrodes with standard-length spike tips. One ear clip was used for reference and one for bias correction. Two bipolar EOG channels were used: the vertical channel above (V+) and below (V-) the right eye, and the horizontal channel at the outer corners of the left (H-) and right (H+) eyes. The skin at the EOG and ear clip sites was cleaned beforehand with alcohol wipes, and participants with pierced ears removed their earring(s) before ear clip attachment. Using the 10-20 coordinate system, electrodes were positioned about the motor cortex (Fp1, Fp2, AFz, F3, Fz, F4, FC1, FC2, C3, Cz, C4, CP1, CP2, Pz) insofar as the UltraCortex Mark IV’s rigid electrode positions would allow. To improve signal quality, participants with long hair tied it into a low ponytail, and their hair was parted under each electrode. A layout of the electrodes can be seen in Figure 1.

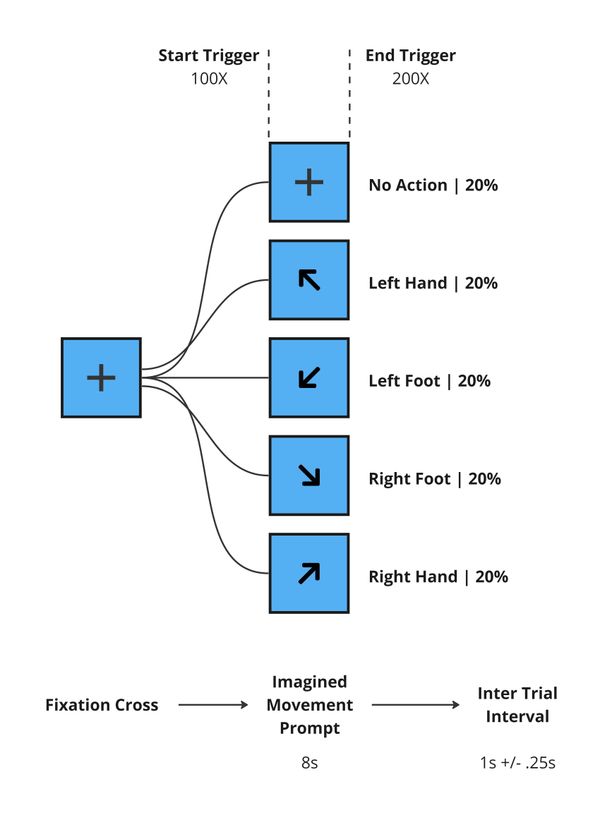

To allow for sufficient end-user functionality for our prototype, we opted for a 4-class classification paradigm, wherein each class corresponded to a distinct motor imagery (MI) of one of the four extremities. In other words, the task that participants completed was to alternately “imagine” moving each of their hands and feet independently, including left hand (LH), right hand (RH), left foot (LF), and right foot (RF).

Each trial started with the user visually attending to a fixation cross. Coinciding with a programmatically embedded event trigger, the fixation cross was then replaced by a directional arrow prompting the participant to imagine movement of one of their extremities. Imagined-movement prompts were presented for 8 s, with an intertrial interval (ITI) of 1 s ± 0.25 s between all trial types. Rest trials were interspersed throughout the MI trials. A layout of the different trials and timing can be found in Figure 3 below.

Each session consisted of 25 trials of each MI class and 25 rest trials, all presented in randomized order.
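
The session structure described above can be sketched roughly as follows. This is an illustration only, not the authors' presentation script: the function name `build_session`, the string labels, the seed, and the choice to draw the ITI jitter uniformly are all assumptions.

```python
import random

CLASSES = ["LH", "RH", "LF", "RF", "REST"]  # four MI classes plus rest


def build_session(trials_per_class=25, iti_mean=1.0, iti_jitter=0.25, seed=None):
    """Return a shuffled list of (label, iti_seconds) tuples for one session."""
    rng = random.Random(seed)
    labels = [c for c in CLASSES for _ in range(trials_per_class)]
    rng.shuffle(labels)  # randomize trial order across all classes
    # Assumption: the ITI is drawn uniformly from mean +/- jitter.
    return [
        (label, rng.uniform(iti_mean - iti_jitter, iti_mean + iti_jitter))
        for label in labels
    ]


schedule = build_session(seed=0)
print(len(schedule))  # 25 trials x 5 classes
```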

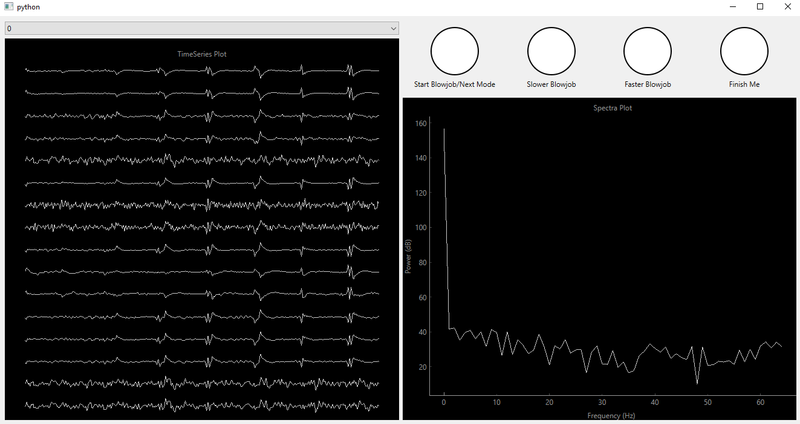

A custom Python script (PyQt6, Brainflow, and MNE) run on a Windows 10 machine was used to present MI prompts, collect biosignal data, and synchronously embed event markers.

To ensure that the functional prototype discussed later could operate on real-time data, we kept the preprocessing pipeline relatively lightweight: it runs in less than 1 s on any given sample, which avoids delays in the user experience.

We first band-pass filtered the data between 5 and 16 Hz, ensuring that the conventional sensorimotor µ-rhythm (8 Hz - 13 Hz) was preserved. The filter was a one-pass, zero-phase, non-causal finite impulse response (FIR) filter designed with the windowed time-domain method, using a Hamming window with 0.0194 passband ripple, 53 dB stopband attenuation, and a filter length of 1.6 s.
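
To make the filter description more concrete, here is a simplified windowed-sinc design using only the Python standard library. It is a sketch under stated assumptions, not the pipeline's actual filter: the helper names are hypothetical, the 201-tap length is derived from 1.6 s at 125 Hz plus one sample, and the 5 Hz and 16 Hz cutoffs are treated as the half-amplitude points, which may differ from how the filtering library places its transition bands.

```python
import math


def hamming_bandpass(fs, low, high, numtaps):
    """Windowed-sinc band-pass FIR taps (Hamming window, odd numtaps)."""
    assert numtaps % 2 == 1, "odd length gives a symmetric, linear-phase filter"
    mid = (numtaps - 1) // 2
    taps = []
    for n in range(numtaps):
        k = n - mid
        if k == 0:
            ideal = 2.0 * (high - low) / fs
        else:
            # Difference of two ideal low-pass (sinc) impulse responses.
            ideal = (math.sin(2 * math.pi * high * k / fs)
                     - math.sin(2 * math.pi * low * k / fs)) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (numtaps - 1))
        taps.append(ideal * window)
    return taps


def gain_at(taps, fs, freq):
    """Magnitude of the filter's frequency response at `freq` Hz."""
    re = sum(t * math.cos(2 * math.pi * freq * n / fs) for n, t in enumerate(taps))
    im = sum(t * math.sin(2 * math.pi * freq * n / fs) for n, t in enumerate(taps))
    return math.hypot(re, im)


FS = 125.0  # sampling rate stated in the text
taps = hamming_bandpass(FS, 5.0, 16.0, numtaps=201)  # 1.6 s * 125 Hz + 1
for f in (1.0, 10.0, 50.0):  # below the band, inside it, above it
    print(f, round(gain_at(taps, FS, f), 4))
```

Because the taps are symmetric, the filter has linear phase; shifting the output back by `(numtaps - 1) / 2` samples removes the delay, which is one way a one-pass FIR filter can be made zero-phase.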

This filtering stage removed noise from several sources, including power-line interference and slow drift across the session caused by perspiration.

After this stage, any channels deemed bad were interpolated, and EOG artifacts, if found, were removed using ICA template matching. If visually discernible noise persisted after all automated and semi-automated filtering, the affected data spans were manually annotated for exclusion from the remaining analysis pipeline.

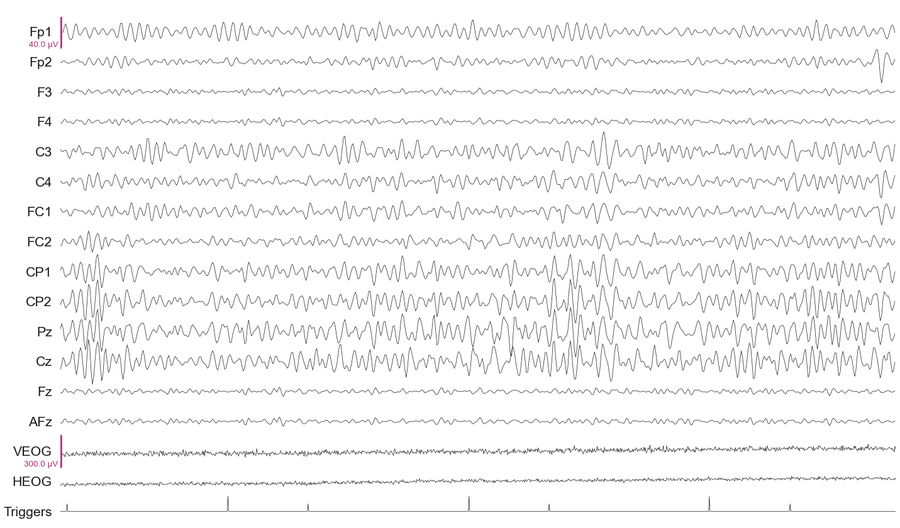

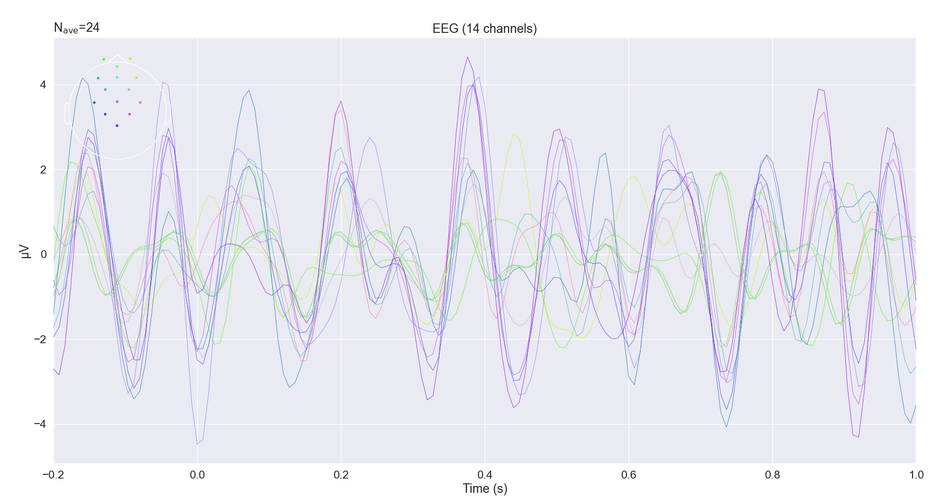

From here, the data was split into “epochs”, or time-locked windows around the event markers. Figure 5 shows what the average LH trial looked like across all channels; it was generated by taking a point-wise average across all LH trials within a single session. Although the remaining analysis focuses on states rather than on discrete time-locked events, this visualization gives readers new to computational neuroscience a sense of how the data is structured.
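
As a rough illustration of epoching and point-wise averaging, the sketch below operates on a single channel stored as a plain list. The function names and the toy signal are hypothetical; the actual pipeline would operate on multi-channel recordings.

```python
def extract_epochs(signal, event_samples, n_samples):
    """Cut fixed-length windows starting at each event marker (one channel)."""
    return [
        signal[start:start + n_samples]
        for start in event_samples
        if start + n_samples <= len(signal)  # skip windows that run off the end
    ]


def pointwise_average(epochs):
    """Average across epochs at each time point."""
    return [sum(values) / len(values) for values in zip(*epochs)]


# Toy example: a ramp signal with three event markers.
signal = [float(v) for v in range(20)]
epochs = extract_epochs(signal, event_samples=[0, 5, 10], n_samples=4)
average = pointwise_average(epochs)
print(average)  # [5.0, 6.0, 7.0, 8.0]
```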

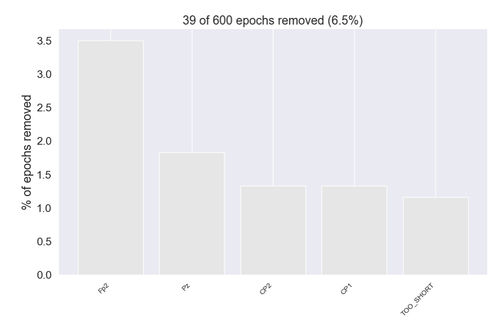

Epochs that exceeded a peak-to-peak amplitude of 100 µV (100e-6 V) were rejected.
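
The rejection rule can be expressed in a few lines. In this sketch an epoch is a list of channels, each a list of voltages in volts; the names are hypothetical, and rejecting the whole epoch when any single channel exceeds the threshold is an assumption about how the criterion was applied.

```python
REJECT_THRESHOLD_V = 100e-6  # 100 microvolts, as stated in the text


def peak_to_peak(channel):
    """Difference between the largest and smallest sample of one channel."""
    return max(channel) - min(channel)


def keep_epoch(epoch, threshold=REJECT_THRESHOLD_V):
    """Keep the epoch only if no channel exceeds the threshold.

    Assumption: one offending channel is enough to reject the whole epoch.
    """
    return all(peak_to_peak(channel) <= threshold for channel in epoch)


clean = [[0.0, 20e-6, -20e-6], [5e-6, -5e-6, 0.0]]   # 40 uV and 10 uV
noisy = [[0.0, 80e-6, -40e-6], [5e-6, -5e-6, 0.0]]   # 120 uV on channel 0
print(keep_epoch(clean), keep_epoch(noisy))  # True False
```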

It is worth noting that the 8s duration per trial allowed us to substantially increase the total number of data points in our training dataset by breaking each original epoch into several overlapping subset epochs (4s in duration).
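
The augmentation step amounts to a sliding window over each 8 s epoch. The sketch below assumes the 125 Hz sampling rate given earlier, so an 8 s epoch has 1000 samples and a 4 s window has 500; the 1 s step between windows is an assumption, since the amount of overlap is not stated.

```python
FS = 125  # samples per second, as stated earlier in the text


def sliding_windows(epoch, window, step):
    """Split one epoch into overlapping windows of `window` samples."""
    return [
        epoch[start:start + window]
        for start in range(0, len(epoch) - window + 1, step)
    ]


epoch = list(range(8 * FS))  # stand-in for one channel of an 8 s trial
# Assumption: windows advance by 1 s; the original overlap is not specified.
sub_epochs = sliding_windows(epoch, window=4 * FS, step=1 * FS)
print(len(sub_epochs), len(sub_epochs[0]))  # 5 500
```

With these assumed settings each original trial yields five training examples instead of one.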

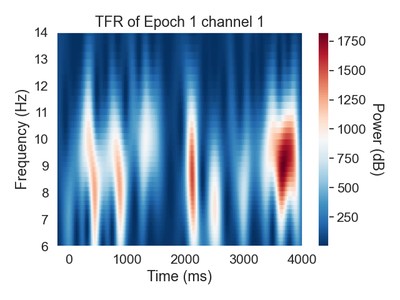

To extract frequency information from the one-dimensional epoched voltages described above, we computed a spectrogram for each trial individually using Morlet wavelet decomposition. Using logarithmically spaced frequencies, we focused on the conventional sensorimotor µ-rhythm band.
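
For readers unfamiliar with wavelet spectrograms, the following standard-library sketch shows the idea on a synthetic signal. Everything specific here is an assumption made for illustration: the 8 to 13 Hz range, six logarithmically spaced frequencies, five wavelet cycles, unit-energy normalization, and the helper names. It is not the decomposition code used in the project.

```python
import cmath
import math


def log_spaced(f_min, f_max, n):
    """Return n logarithmically spaced frequencies from f_min to f_max."""
    ratio = (f_max / f_min) ** (1.0 / (n - 1))
    return [f_min * ratio ** i for i in range(n)]


def morlet(freq, fs, n_cycles=5.0):
    """Complex Morlet wavelet: a Gaussian-windowed complex sinusoid."""
    sigma_t = n_cycles / (2.0 * math.pi * freq)
    half = int(round(5.0 * sigma_t * fs))  # truncate at +/- 5 standard deviations
    wavelet = [
        cmath.exp(2j * math.pi * freq * k / fs)
        * math.exp(-((k / fs) ** 2) / (2.0 * sigma_t ** 2))
        for k in range(-half, half + 1)
    ]
    norm = math.sqrt(sum(abs(w) ** 2 for w in wavelet))  # unit energy
    return [w / norm for w in wavelet]


def wavelet_power(signal, freq, fs, n_cycles=5.0):
    """Power over time at one frequency (fully overlapping samples only)."""
    conj = [w.conjugate() for w in morlet(freq, fs, n_cycles)]
    n = len(conj)
    return [
        abs(sum(signal[i + k] * conj[k] for k in range(n))) ** 2
        for i in range(len(signal) - n + 1)
    ]


FS = 125.0
signal = [math.sin(2 * math.pi * 10.0 * t / FS) for t in range(500)]  # 4 s, 10 Hz
freqs = log_spaced(8.0, 13.0, 6)
# One row per frequency: this list of rows is the spectrogram.
spectrogram = [wavelet_power(signal, f, FS) for f in freqs]
mean_power = [sum(row) / len(row) for row in spectrogram]
best = freqs[mean_power.index(max(mean_power))]
print(round(best, 2))  # frequency bin with the most power
```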

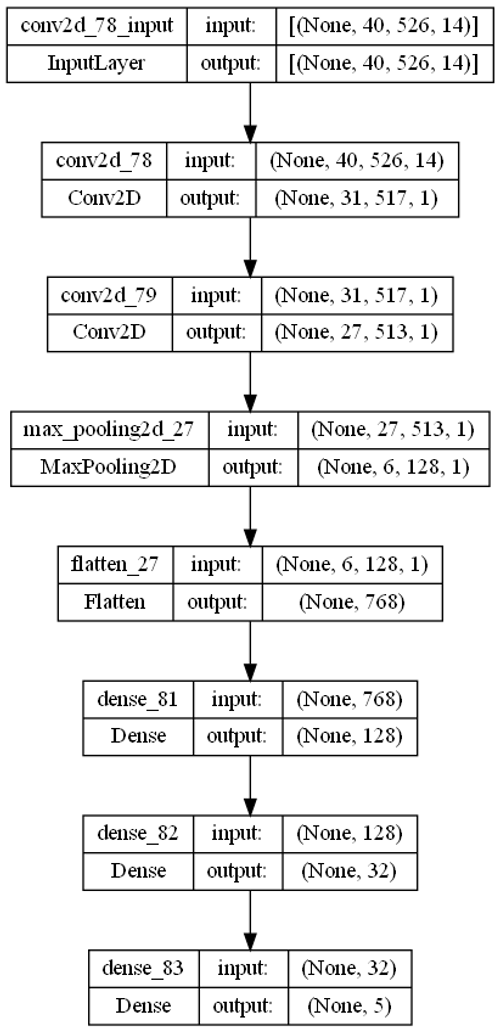

We created a deep convolutional neural network whose input layer took in 14 of the aforementioned spectrogram images for a given trial of interest, one image per EEG electrode. The output layer of the model consisted of five floats, corresponding to the four imagined movements and a fifth rest classification. These five floats sum to 1.
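
An output layer whose five values sum to 1 is commonly produced with a softmax over the final layer's raw scores. The text does not say which normalization was used, so treat the sketch below as an assumption; the label order and the example scores are made up.

```python
import math

LABELS = ["LH", "RH", "LF", "RF", "REST"]  # assumed ordering of the outputs


def softmax(logits):
    """Convert raw scores into non-negative values that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([2.0, 0.5, 0.1, -1.0, 0.0])  # hypothetical raw scores
predicted = LABELS[probabilities.index(max(probabilities))]
print(predicted, round(sum(probabilities), 6))
```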

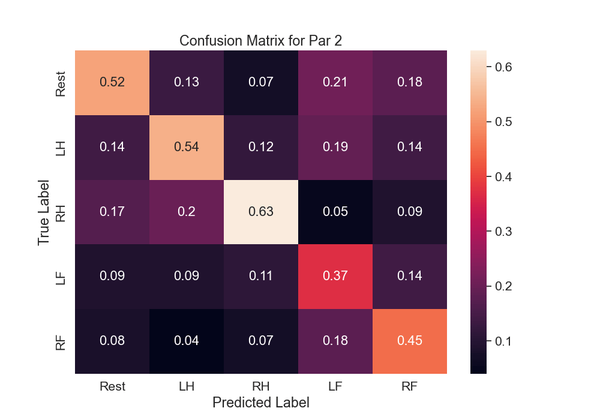

We have included the confusion matrix for participant #2 in Figure 9, which denotes the within-class accuracies and in which each column sums to 1. The confusion matrix details the accuracy of a model by comparing the ground-truth classification with the model output. If the model were performing at chance, we would expect each cell to have an even weighting of approximately 0.20. Instead, given a chunk of brain data, the model correctly classified imagined movement of the RH 64% of the time (LH: 54%, LF: 37%, RF: 45%). Considering the 5-class nature of the classification, this represents a modest accuracy for each of the four target classes (LH, RH, LF, RF).
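
A column-normalized confusion matrix of this kind can be computed as follows. The sketch assumes that columns index the ground-truth class and rows index the model's prediction, which is one reading of "each column sums to 1"; the toy labels are invented and are not the study's data.

```python
def confusion_matrix(true_labels, predicted_labels, labels):
    """Return a matrix where entry [row][col] is the share of trials with
    true class `col` that were predicted as class `row`.

    Assumption: columns are ground truth, rows are predictions.
    """
    index = {label: i for i, label in enumerate(labels)}
    n = len(labels)
    counts = [[0] * n for _ in range(n)]
    for truth, guess in zip(true_labels, predicted_labels):
        counts[index[guess]][index[truth]] += 1
    col_totals = [sum(counts[r][c] for r in range(n)) for c in range(n)]
    return [
        [counts[r][c] / col_totals[c] if col_totals[c] else 0.0 for c in range(n)]
        for r in range(n)
    ]


# Toy two-class example (not study data).
truth = ["LH", "LH", "RH", "RH"]
guess = ["LH", "RH", "RH", "RH"]
matrix = confusion_matrix(truth, guess, labels=["LH", "RH"])
print(matrix)  # [[0.5, 0.0], [0.5, 1.0]]
```

The diagonal holds the within-class accuracies, so in the toy example LH trials are classified correctly half the time and RH trials every time.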

Our analysis pipeline was implemented in real time alongside widgets displaying the filtered time series, spectrograph, and model output. When the model output for one of the four target motor imagery actions exceeded a certainty threshold of 0.8, the corresponding command circle flashed red, according to a predetermined mapping of MI commands to program commands. Concurrently, a webhook issued a command to a paired Autoblow AI+ device.
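
The decision rule can be sketched as a small function that turns a model output into at most one command. The command names in `COMMAND_MAP` are hypothetical placeholders, since the actual mapping is not given, and the sketch stops short of the webhook call because the device's interface is not described.

```python
LABELS = ["LH", "RH", "LF", "RF", "REST"]  # assumed ordering of the outputs
THRESHOLD = 0.8  # certainty threshold stated in the text

# Hypothetical mapping from MI class to program command.
COMMAND_MAP = {
    "LH": "command_a",
    "RH": "command_b",
    "LF": "command_c",
    "RF": "command_d",
}


def select_command(probabilities, labels=LABELS, threshold=THRESHOLD):
    """Return the mapped command if a target class exceeds the threshold.

    Returns None for rest or for any output below the threshold.
    """
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    label = labels[best]
    if label in COMMAND_MAP and probabilities[best] > threshold:
        return COMMAND_MAP[label]
    return None


print(select_command([0.85, 0.05, 0.04, 0.03, 0.03]))  # command_a
print(select_command([0.50, 0.20, 0.10, 0.10, 0.10]))  # None
```

Returning `None` for low-certainty outputs means the device only receives a command when the model is confident, which limits spurious activations.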

This prototype demonstrated the feasibility of BCI-enabled adult sexual pleasure devices. Although this project connected a sexual device for men to a brain-computer interface, the same technology can be used to control any pleasure device with an electrical output to a motor, including but not limited to vibrators. For a clinical population who cannot use their hands for sexual purposes, brain-controlled sexual devices may provide an important outlet for sexual autonomy, pleasure, and expression.

*In future iterations there is the possibility of allowing the user to issue commands via cognitions that are more erotic in nature. However, this would require novel datasets to ensure viability. Furthermore, erotic cognitions would likely have to be body-based for the present motor imagery paradigm to translate.

**Cost considerations for the present project led to a very limited participant pool which necessitated creating a model from individualized datasets; there is potential to leverage an expanded dataset and create a generalizable model that doesn’t require several hours of data collection per user. This generalizable model would allow the end user to spend a fraction of the time calibrating the model to their particular brain activity.